Not All Context Is Created Equal: Why Ontologies Matter

Ontologies are having a moment. And here’s why: AI can’t function well without the right context.

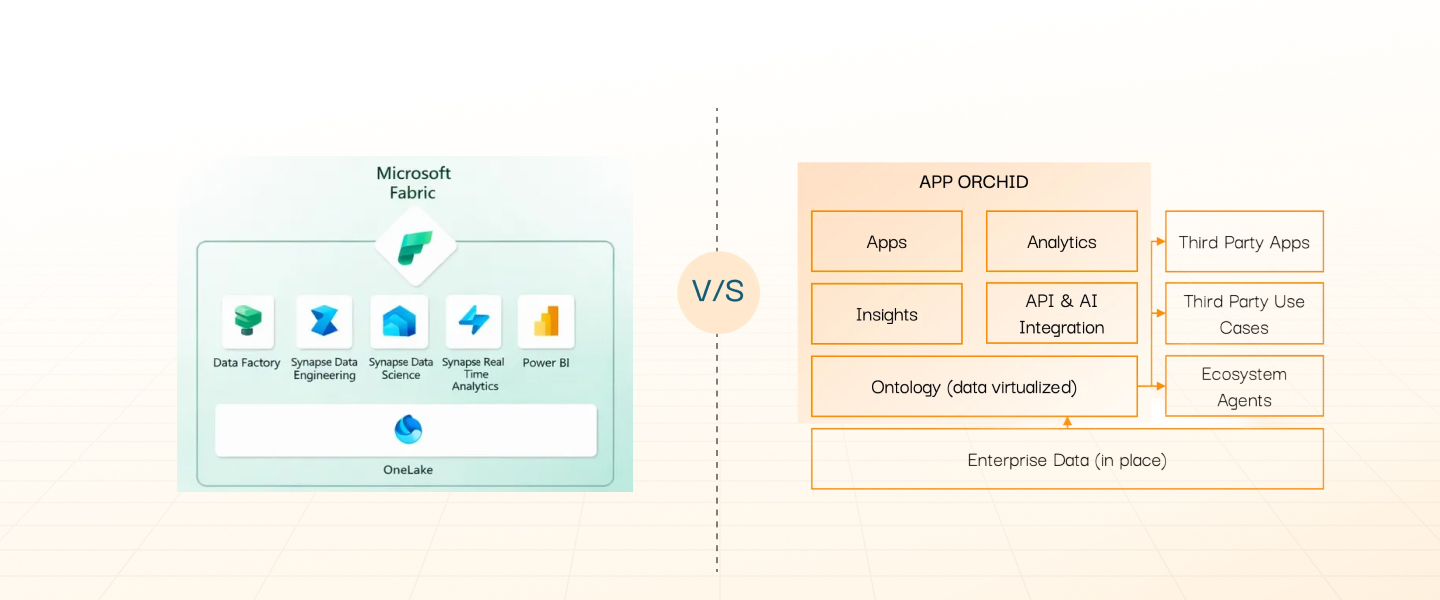

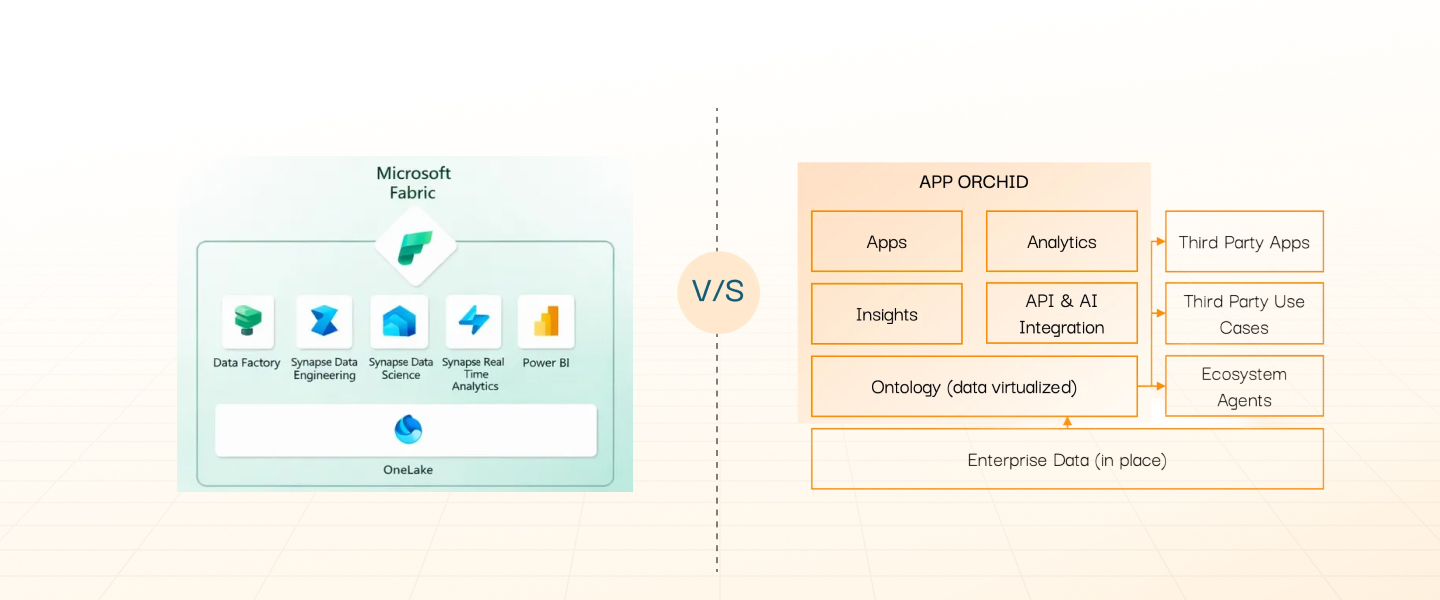

Microsoft has joined the technology providers putting a spotlight on the issue with its announcement of Fabric IQ, a new semantic intelligence layer designed to give enterprises a shared, business-level understanding of their data. It’s an approach that mirrors what we’ve been building for years at App Orchid with our own Semantic Layer.

Microsoft isn’t alone.

Databricks, Tableau, and others are moving in the same direction. The momentum is clear: the industry is finally recognizing that high-quality context is the missing ingredient in enterprise AI, and ontology-based semantic layers are the best way to deliver it.

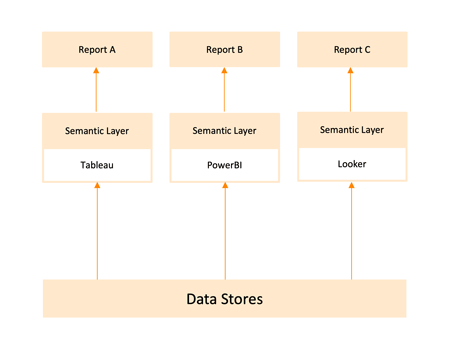

The challenge with existing semantic layers

In many organizations, the notion of a “semantic layer” has long meant a translation or presentation layer above the data warehouse or lakehouse, something that abstracts table names, field codes, and technical logic into business-friendly terms, metrics and relationships. In the era of AI, semantic layers take on a new role and are now an extremely valuable tool to ground LLMs in enterprise data for better answers and agentic behaviors.

We see two major approaches to these legacy semantic layers.

1. Semantic layers embedded in BI tools.

Many BI platforms (for example, Power BI or Looker) include a semantic layer that is tightly coupled to that tool. The business metric definitions, join logic and hierarchies live inside the BI tool’s own model (or engine). That coupling creates some drawbacks:

- Each BI tool has its own semantics, so definitions may diverge between tools.

- It is hard to reuse the same semantics in other contexts (data science, AI, custom apps, etc.).

- It can lead to vendor lock-in: once you build all your logic inside one BI tool, switching or integrating becomes expensive.

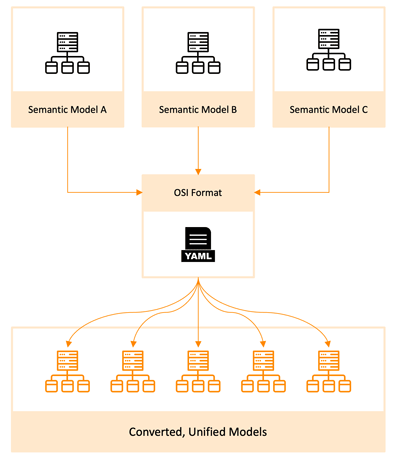

2. Semantic layers built via mark-up or configuration (YAML, LookML, etc).


More modern semantic-layer initiatives still rely on declarative modelling (e.g., YAML or proprietary modelling languages) to define dimensions, measures, hierarchies and relationships. But this also creates practical challenges:

- Manual modelling effort: semantics must be defined, maintained, and versioned as code. Adding data or changing definitions can take significant effort.

- Drift and duplication: when multiple teams build semantics (for dashboards, for AI, for queries), definitions diverge.

- Weak support for unstructured data or conversational-agent use-cases: the business logic is geared to tabular data, not to agents, context, natural language, or multimodal data.
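To make the declarative approach and its drift problem concrete, here is a minimal sketch in Python. It is not taken from any specific tool: the `Measure` and `Dimension` classes, the `compile_query` helper, and the table and column names are all hypothetical stand-ins for what a YAML or LookML model would declare.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """A business metric declared as a SQL aggregate (hypothetical model)."""
    name: str
    expression: str  # e.g. "SUM(amount)"
    table: str


@dataclass(frozen=True)
class Dimension:
    """A business-friendly name for a physical column (hypothetical model)."""
    name: str
    column: str


def compile_query(measure: Measure, dimension: Dimension) -> str:
    """Translate the declarative definitions into a SQL string."""
    return (
        f"SELECT {dimension.column} AS {dimension.name}, "
        f"{measure.expression} AS {measure.name} "
        f"FROM {measure.table} GROUP BY {dimension.column}"
    )


def definitions_diverge(a: Measure, b: Measure) -> bool:
    """Same business name, different logic: the drift described above."""
    return a.name == b.name and a.expression != b.expression


# Two teams model "revenue" independently (assumed example data).
bi_revenue = Measure("revenue", "SUM(amount)", "orders")
ai_revenue = Measure("revenue", "SUM(amount - refunds)", "orders")
region = Dimension("region", "region_code")

print(compile_query(bi_revenue, region))
print("Drift detected:", definitions_diverge(bi_revenue, ai_revenue))
```

Even in this toy form, every new metric or changed definition is a hand-written edit, and nothing stops a second team from declaring a conflicting "revenue".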

While the semantic-layer concept is sound, many implementations still struggle with consistency, re-use, governance, cross-tool interoperability and readiness for AI or conversational agents.

The good news is that the industry now fully recognizes these challenges and has introduced a partial solution: the Open Semantic Interchange.

The Open Semantic Interchange: a partial measure?

In 2025 we saw a major industry initiative: the Open Semantic Interchange (OSI). Partners such as Snowflake, Salesforce, dbt Labs, Alation and others have publicly committed to a vendor-neutral, open-source specification for semantic metadata.

Why is this significant? A few key motivations:

- Interoperability: A single semantic definition should be usable by BI dashboards, SQL queries, notebooks, AI/LLM-driven agents, and analytics products. OSI seeks to enable that reuse rather than rebuilding semantics in each tool.

- Governance & trust: Inconsistent metric definitions between teams and tools erode confidence in analytics and AI. OSI aims to make business logic portable, consistent, and auditable.

- Avoiding vendor lock-in: If semantics are trapped inside one BI tool (or one modelling language), organizations lose agility. A neutral interchange layer allows best-of-breed components to coexist.

For decision-makers, OSI signals a turning point: semantic layers are moving from tool-specific abstractions to interoperable infrastructure for analytics and AI.

Many questions remain. How will older standards interoperate with newer approaches to storing semantics and context? How do these code-first approaches adapt to changing definitions and context requirements based on role, use case or domain?

Major players now admit existing tools fall short for context engineering.

- Looker (Google Cloud): In its blog “How Looker’s semantic layer enhances gen AI trustworthiness,” Google announced that it is opening up Looker’s semantic layer, enabling LookML-defined models to be used outside Looker (via JDBC, connectors to Tableau, etc).

- Unity Catalog (Databricks): At the 2025 Data + AI Summit, Databricks announced “Unity Catalog Metrics: One semantic layer for all data and AI workloads.” They highlight that defining metrics at the data layer (rather than only at BI surfaces) enables reuse across dashboards, notebooks, and AI agents. The underlying guidance emphasizes that business metrics, synonyms, dimensions, and relationships are now first-class assets in Unity Catalog.

- Tableau Semantics (Tableau): Tableau jumped in with Tableau Semantics, pitching an "AI-infused" layer that uses LLMs to enrich data descriptions and build definitions.

These announcements underline a pattern: semantic layers are evolving from “nice to have” abstractions in BI, to foundational blocks for AI, analytics, governance and enterprise data fabrics.

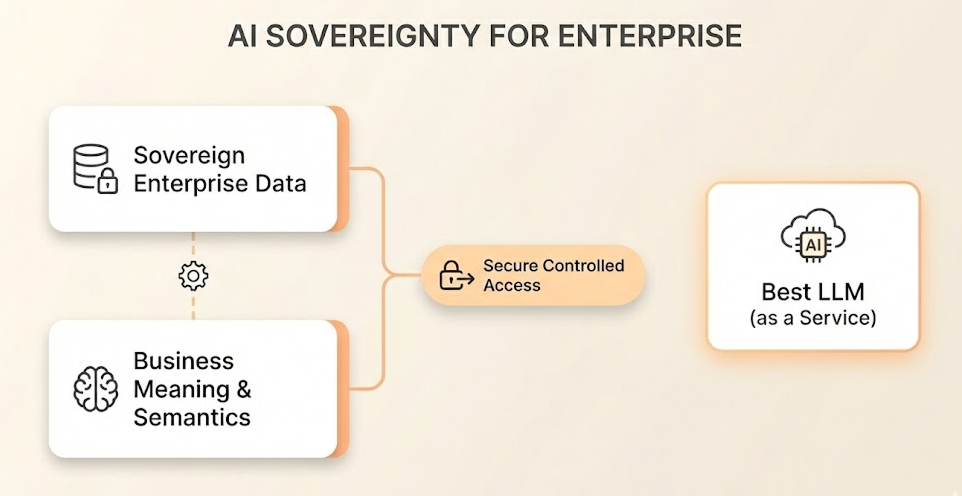

Why ontologies matter for agents, AI and context engineering

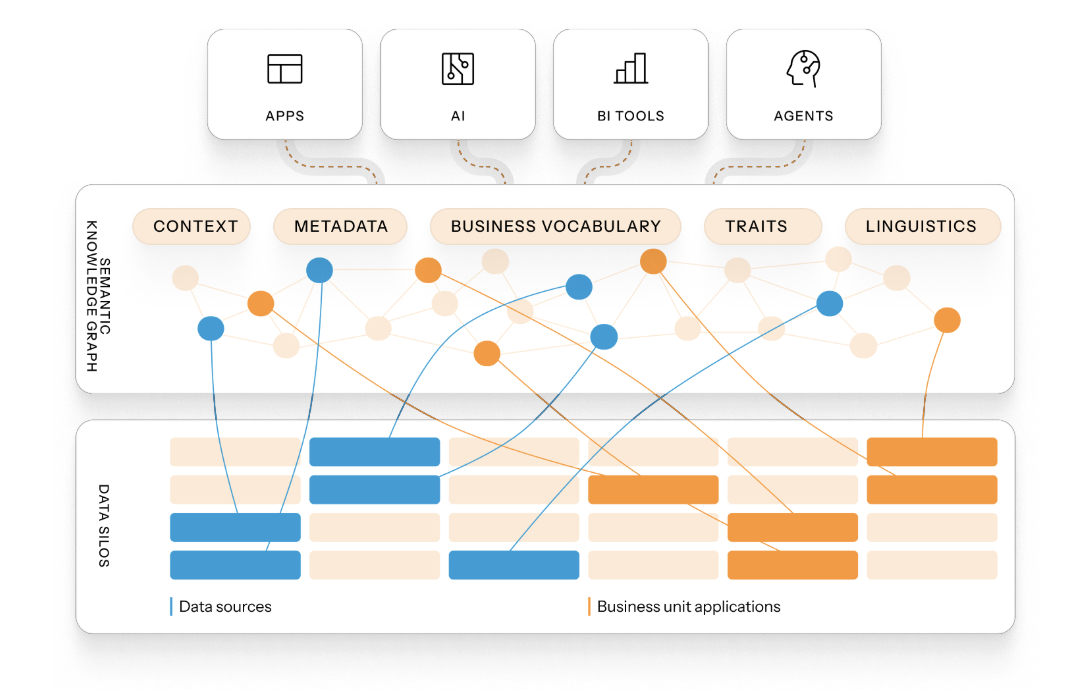

Major players are realizing that response accuracy depends on the degree to which data and semantics are grounded in an ontology, a structured model of business domain concepts, relationships, properties, and rules.

Why does this matter?

- Context at scale: Agents and AI require more than “table X join table Y to get revenue.” They require business knowledge: what is a customer, what is churn, how subscriptions relate to products, what “region” means in this business context. Ontologies encode this richer domain of knowledge, enabling more accurate, relevant answers.

- Unstructured & structured data: Many semantic-layer approaches focus solely on structured tabular data (columns, tables, joins). But if you want to incorporate text, logs, events, streaming data, conversational queries or knowledge graphs, you need richer modelling. Ontologies bridge structured + unstructured, enabling context engineering, semantic reasoning and bridging AI agents with analytic pipelines.

- Agent readiness and semantic inference: For agents (LLMs, conversational bots) to act reliably, they must operate on well-defined business logic and semantics. For example, when a bot is asked “what are expected renewals next quarter?”, the semantic layer must know how to compute that from the underlying data, and it must also understand the business term “renewal” and the time-period semantics. Ontologies enable this reasoning. As seen with Palantir and other platforms, domain ontologies anchor operational and analytical systems to meaningful domain processes.
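The points above can be illustrated with a small sketch. It assumes a deliberately tiny in-memory ontology: the `Concept` and `Ontology` classes, the synonyms, the relationship names, and the source identifiers such as `billing.renewal_events` are all hypothetical, and a production ontology would carry far more (properties, rules, time semantics).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Concept:
    """A business concept with its vocabulary and a hypothetical data source."""
    name: str
    synonyms: Set[str] = field(default_factory=set)
    source: str = ""  # assumed table or document collection


@dataclass
class Ontology:
    concepts: Dict[str, Concept] = field(default_factory=dict)
    # Relationships stored as (subject, predicate, object) triples.
    relations: List[Tuple[str, str, str]] = field(default_factory=list)

    def add(self, concept: Concept) -> None:
        self.concepts[concept.name] = concept

    def relate(self, subject: str, predicate: str, obj: str) -> None:
        self.relations.append((subject, predicate, obj))

    def resolve(self, term: str) -> Optional[Concept]:
        """Map a business term, as a user might phrase it, to a concept."""
        needle = term.strip().lower()
        for concept in self.concepts.values():
            if needle == concept.name or needle in concept.synonyms:
                return concept
        return None

    def neighbors(self, name: str) -> List[Tuple[str, str]]:
        """Return (predicate, object) pairs leaving the given concept."""
        return [(p, o) for s, p, o in self.relations if s == name]


ontology = Ontology()
ontology.add(Concept("customer", {"client", "account"}, "crm.customers"))
ontology.add(Concept("subscription", {"contract"}, "billing.subscriptions"))
ontology.add(Concept("renewal", {"renewals", "contract renewal"},
                     "billing.renewal_events"))
ontology.relate("customer", "holds", "subscription")
ontology.relate("subscription", "has", "renewal")

concept = ontology.resolve("Renewals")
print(concept.name, "->", concept.source)
print(ontology.neighbors("customer"))
```

An agent that resolves “renewals” to a concept, and can walk from customer to subscription to renewal, has context that a bare list of tables and joins does not provide.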

The Microsoft Fabric IQ announcement, for example, telegraphs teaching AI agents to understand business operations, not just fetch raw data. That capability only works if the semantic layer is highly enriched and structured as an ontology.

For the AI era, legacy semantic layers must evolve into semantic knowledge layers with ontologies that provide a legible, maintainable, and extendable structure.

The App Orchid semantic layer: an ontology-first approach with real-world success

App Orchid delivers a uniquely capable semantic layer for modern organizations. Here are some of the differentiators and development history:

Proven deployment

We launched our knowledge graph-based semantic layer eight years ago, initially focused on applications. With the introduction of ChatGPT, we launched Easy Answers, conversational analytics for enterprises that needed to query both structured data (warehouses, lakes) and unstructured data (text, documents, logs). Since then, we have refined our ontological approach, broken down silos, and discovered millions of relationships that help answer questions. We have also validated in production environments how our semantic layer accelerates analytics, dashboards, and agent-based use-cases. Our references include enterprises in finance, hospitality, and industrial operations.

Ontology-driven, covering structured + unstructured data

Our fundamental architecture differs from purely table/column or YAML semantic models. We discover a core ontology of business concepts (customers, products, contracts, renewals, events, channels, sentiment) plus relationships, vocabulary and domain context. This ontology maps downward into both structured data (tables, fields) and unstructured sources (documents, logs, chat transcripts). Because of this hybrid capability, our clients ask questions like: “Show me customer churn risk this quarter, including contract billing discrepancies and support ticket patterns.”

Such queries require semantics beyond simple column joins; they require domain reasoning and entity relationships.

Automatic discovery, minimal manual effort

We recognize the pain of manual semantic modelling. At App Orchid we embed semi-automated discovery: our system ingests metadata, schemas, and document taxonomies, then uses entity extraction and relationship-mining to suggest ontology extensions, mappings and more. LLMs are leveraged to generate descriptions, metadata, and context. A human in the loop validates all suggestions. Typically, more than 90% of the ontology and context are auto-discovered in our engagements; the remaining manual modelling is domain-specific tuning and business-user refinement. That significantly reduces time-to-value.
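The general suggest-then-validate pattern can be sketched as follows. This is an illustration only, not App Orchid's implementation: simple token matching stands in for entity extraction and relationship-mining, and the `VOCABULARY`, `suggest_mappings`, and `review` names, along with the column names, are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class Suggestion:
    column: str
    concept: str
    matched_tokens: Tuple[str, ...]


# Assumed vocabulary: concept name -> keywords that hint at it.
VOCABULARY: Dict[str, Set[str]] = {
    "customer": {"customer", "cust", "client"},
    "renewal": {"renewal", "renew"},
    "contract": {"contract", "agreement"},
}


def tokenize(column: str) -> List[str]:
    """Split a column name such as 'crm.cust_id' into lowercase tokens."""
    return [t for t in re.split(r"[^a-z0-9]+", column.lower()) if t]


def suggest_mappings(columns: List[str],
                     vocabulary: Dict[str, Set[str]]) -> List[Suggestion]:
    """Propose column-to-concept mappings; nothing is applied yet."""
    suggestions = []
    for column in columns:
        tokens = set(tokenize(column))
        for concept, keywords in vocabulary.items():
            hits = tokens & keywords
            if hits:
                suggestions.append(
                    Suggestion(column, concept, tuple(sorted(hits))))
    return suggestions


def review(suggestions: List[Suggestion],
           approve: Callable[[Suggestion], bool]) -> Dict[str, str]:
    """Human-in-the-loop step: keep only the suggestions a reviewer approves."""
    return {s.column: s.concept for s in suggestions if approve(s)}


columns = ["crm.cust_id", "billing.renewal_date", "inventory.sku"]
proposed = suggest_mappings(columns, VOCABULARY)
# Stand-in for a reviewer who rejects the renewal mapping.
accepted = review(proposed, lambda s: s.concept != "renewal")
print(proposed)
print(accepted)
```

The design point is the separation of concerns: discovery only proposes, and the mapping enters the ontology only after the `approve` callback, standing in for a human reviewer, accepts it.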

Accuracy, governance and agent readiness

Because our semantic layer is ontology-backed and governed, we deliver built-in auditing, versioning, and direct integration into conversational agents and analytics pipelines. Business users, data scientists, and AI agents all refer to the same definitions. That means one “churn” metric, one “renewal” definition, and one “customer” concept, consistently computed whether in a dashboard, a notebook, or an agent query. That alignment is exactly what the market is demanding, especially with the rise of OSI and unified semantic standards.

Why this matters for you, the decision-maker

- You get semantic reuse: model once, serve everything.

- You get agent-ready semantics: your conversational or AI apps use the same logic as dashboards.

- You reduce semantic drift: fewer definitions floating around different tools.

- You support both structured and unstructured data, enabling broader analytics use-cases.

- You are prepared for open semantic standards (e.g., OSI) by adopting a future-friendly semantic-layer architecture.

Conclusion

A new category of semantic layers is emerging as AI demands deeper meaning. For many years semantic layers lived within BI tools, with variable governance, limited reuse, and high manual effort. The rise of AI, conversational analytics, and agentic workflows has exposed the limitations of those architectures. Major vendors are racing to build semantic-layer capabilities (own models, catalog-driven semantics, unified metrics) and organizations are demanding “one version of the truth” across dashboards, notebooks, AI agents and apps.

The OSI initiative solves part of the problem: semantics must be interoperable, governance-driven and usable beyond one tool. And as we’ve seen from Microsoft and Palantir, ontologies are the future that solves the other part: dynamism and adaptability.

In this context, App Orchid offers a compelling alternative: an ontology-based semantic layer that spans structured and unstructured data, requires minimal manual modelling, supports governable reuse and is designed for AI and agent use-cases. For organizations looking to scale analytics, move into conversational and generative-AI workflows, and avoid semantic chaos, this approach is well aligned with the trajectory of the market.
